Using Product Analytics Insights for Mobile A/B Testing and Conversion Rate Optimization

Product analytics gives organizations the data-driven insights they need to make informed decisions and craft better user experiences. In particular, A/B testing and conversion rate optimization (CRO) are critical techniques for fine-tuning mobile applications. This article covers the technical aspects of implementing and analyzing both, specifically in a mobile context.

Mobile A/B Testing: A Prerequisite to Optimization

A/B testing, also known as split or bucket testing, involves comparing two versions of a product feature to determine which performs better. On mobile platforms, the execution of these tests requires a slightly different approach due to factors such as different operating systems (OS), screen sizes, and user behaviors.

Implementing A/B testing within a mobile environment can be managed via various methods, including:

1. Server-Side Testing

In server-side A/B testing, the different experiences or versions of your application are generated on the server rather than on the user's device (client-side). This type of testing is particularly valuable when the changes significantly alter your app's core functionality, databases, or back-end services.

Consider a simplified example where an e-commerce mobile application company wants to experiment with its product recommendation algorithm to enhance user engagement and sales. They design two versions of the algorithm:

- Algorithm A (the control group) - Recommends products based on the user's recent searches

- Algorithm B (the test group) - Recommends products based on the user's recent searches and purchasing history

In server-side A/B testing, when a user opens the app, a request is sent to the server to fetch the product recommendations. The server decides whether this particular user is part of the control group or the test group, then runs the corresponding algorithm to generate the product recommendations. The results are then sent back to the mobile app to be displayed to the user.

This process occurs behind the scenes, ideally with no discernible difference in loading time or performance from the user's perspective. The user is unaware of the test; they simply see a list of product recommendations.
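The request flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the experiment name, the 50/50 split, and both recommendation functions are hypothetical stand-ins for the real algorithms.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "reco-algo-test") -> str:
    """Deterministically map a user to 'A' (control) or 'B' (test)."""
    # Hashing the experiment name together with the user ID means the same
    # user always lands in the same bucket for this experiment.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # bucket in 0..99
    return "A" if bucket < 50 else "B"  # assumed 50/50 split


def recommend_from_searches(searches):
    # Placeholder for Algorithm A: de-duplicated recent searches, in order.
    return list(dict.fromkeys(searches))[:3]


def recommend_from_searches_and_purchases(searches, purchases):
    # Placeholder for Algorithm B: purchases first, then recent searches.
    return list(dict.fromkeys(purchases + searches))[:3]


def handle_recommendation_request(user_id, searches, purchases):
    """Server-side handler: pick the variant, run its algorithm, respond."""
    variant = assign_variant(user_id)
    if variant == "A":
        items = recommend_from_searches(searches)
    else:
        items = recommend_from_searches_and_purchases(searches, purchases)
    # The variant is returned so it can be logged alongside analytics events.
    return {"variant": variant, "items": items}
```

The mobile app only ever receives the final list, which is why the user cannot tell which algorithm produced it.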

In terms of managing these tests, several points require careful attention:

- User Assignment: Each user should consistently get the same version of the feature throughout the test to prevent confounding your results. In our example, if a user initially receives recommendations from Algorithm A, they should continue to get Algorithm A recommendations throughout the test period. This can be achieved using persistent user identifiers and storing the assigned group information in a database.

- Performance Monitoring: As server-side testing often involves changes to your back-end services, it's crucial to monitor server performance to identify and address any potential slowdowns or issues quickly.

- Rollback Mechanisms: If a new feature causes problems, you need a plan to roll back changes quickly to minimize user disruption. In our example, if Algorithm B starts causing server performance issues, the company needs a mechanism to switch all users back to Algorithm A swiftly.

- Statistical Significance: As with any A/B test, you should only conclude the test once you have collected the amount of data you planned for in advance. Stopping as soon as the results first look significant inflates the rate of false positives.
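As a rough sketch of the user assignment and rollback points above, the class below keeps each user's group in a dictionary and exposes a switch that sends everyone back to Algorithm A. The class name, the in-memory dictionary (standing in for a database table), and the `roll_back` method are all assumptions made for illustration.

```python
import random


class ExperimentAssignments:
    """In-memory stand-in for a database table of user -> variant."""

    def __init__(self, test_share: float = 0.5, seed=None):
        self._assignments = {}            # user_id -> "A" or "B"
        self._rng = random.Random(seed)   # seedable for reproducible tests
        self.test_share = test_share      # fraction of users sent to "B"
        self.rolled_back = False

    def variant_for(self, user_id: str) -> str:
        # After a rollback every user gets the control experience.
        if self.rolled_back:
            return "A"
        # Assign once, then always return the stored group.
        if user_id not in self._assignments:
            in_test = self._rng.random() < self.test_share
            self._assignments[user_id] = "B" if in_test else "A"
        return self._assignments[user_id]

    def roll_back(self) -> None:
        """Switch all users back to the control group (Algorithm A)."""
        self.rolled_back = True
```

Stored assignments are kept after a rollback, so the data collected up to that point can still be attributed to the right group.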

Server-side testing gives you robust control and flexibility to test significant changes in your app, but its successful implementation demands careful planning and monitoring.

2. Client-Side Testing

Client-side A/B testing, unlike server-side testing, involves modifying and serving different versions of the application or feature directly from the user's device. This process leverages the computational resources of the user's device (client) to implement the different variations of the feature or UI element being tested.

Client-side A/B testing is particularly advantageous for UI/UX changes or non-complex feature tests that don't involve server-side components. These changes could include elements like button colors, text copy, image placements, or other frontend design elements.

Consider an example: a music streaming app is exploring ways to increase user engagement. They hypothesize that changing the color and position of the 'Play' button on the home screen could lead to increased plays. They decide to test two variations: Variation A (control) leaves the 'Play' button as it is, while Variation B (test) changes the 'Play' button's color and moves it to a more prominent position on the screen.

The key aspects to consider during client-side testing include:

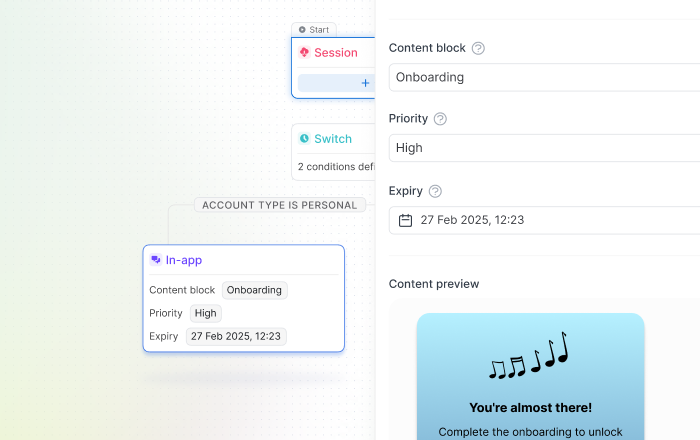

- Feature Flags: These are a key component of client-side testing. A feature flag, also known as a feature toggle, allows you to turn features of your application on or off without deploying new code. This means you can ship Variation B to the user's device but keep it 'turned off' until you're ready to include the user in the test.

- Randomization: To avoid selection bias and ensure the validity of the test, users need to be randomly assigned to either the control or the test group. The randomization algorithm needs to be consistent, ensuring a user sees the same version of the app each time they open it during the testing period.

- Performance Implications: Because client-side A/B testing uses the device's computational resources, developers need to ensure that the test does not noticeably degrade the app's performance. In practice, client-side changes are mostly UI changes and rarely cause performance problems, but every variant still needs thorough testing to confirm that the UI renders and behaves correctly. This matters for users and for the experiment alike: a broken UI in one variant taints the experiment data.

- Analytics: Since different versions are served directly from the user's device, robust analytics tracking must be implemented to correctly collect data about user interactions for each variant. Specifically, only users who actually saw or interacted with the changed UI should be counted in the experiment, not everyone who used the app during the experiment period. Each counted user is then measured against the goal the experiment is optimizing for. For instance, if the goal is purchases, users who viewed or interacted with the UI and made a purchase count as successes, while users who viewed or interacted with it but did not purchase count as failures. Statistical significance is calculated from these counts.
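The exposure rule in the analytics point can be illustrated with a small sketch. It assumes a chronologically ordered stream of `(user_id, variant, event_name)` tuples and the hypothetical event names `"exposure"` and `"purchase"`; a real analytics pipeline will have its own event schema.

```python
from collections import defaultdict


def conversion_by_variant(events):
    """Count exposed users and converters per variant.

    `events` is an iterable of (user_id, variant, event_name) tuples in
    chronological order. Only users with an "exposure" event are counted,
    and a purchase counts only if it happens after the exposure.
    """
    exposed = defaultdict(set)
    converted = defaultdict(set)
    for user_id, variant, name in events:
        if name == "exposure":
            exposed[variant].add(user_id)
        elif name == "purchase" and user_id in exposed.get(variant, ()):
            converted[variant].add(user_id)

    results = {}
    for variant, users in exposed.items():
        successes = len(converted.get(variant, set()))
        results[variant] = {
            "exposed": len(users),
            "converted": successes,
            "rate": successes / len(users),
        }
    return results
```

Users who opened the app but never reached the changed screen produce no exposure event, so they stay out of both the numerator and the denominator.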

While client-side testing offers great flexibility for testing UI changes and provides quicker implementation cycles, it is essential to conduct these tests with careful planning, particularly around feature flag management, randomization, and analytics tracking. In combination with server-side testing, client-side testing forms a comprehensive approach to optimizing your mobile app based on real user data.
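To make the feature flag and randomization points concrete, here is a minimal sketch of a flag that is shipped switched off and, once enabled, consistently puts a fixed share of devices into the test group. The `FeatureFlag` class, the flag name, and the button styles are hypothetical; Python is used only for illustration, and a real app would implement this in its platform language or through an experimentation SDK.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class FeatureFlag:
    name: str
    enabled: bool = False     # master switch: shipped "off" by default
    rollout_percent: int = 0  # share of devices placed in the test group

    def is_on(self, device_id: str) -> bool:
        if not self.enabled:
            return False
        # Hash-based bucketing: random across devices, but stable for a
        # given device, so the user sees the same variant on every launch.
        digest = hashlib.sha256(f"{self.name}:{device_id}".encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "big") % 100
        return bucket < self.rollout_percent


def play_button_style(flag: FeatureFlag, device_id: str) -> dict:
    """Return the 'Play' button style for this device (values are made up)."""
    if flag.is_on(device_id):
        return {"color": "green", "position": "top"}   # Variation B (test)
    return {"color": "grey", "position": "bottom"}     # Variation A (control)
```

For example, `FeatureFlag("new-play-button", enabled=True, rollout_percent=50)` would send roughly half of all devices to Variation B, and setting `enabled=False` returns every device to the control layout without shipping a new build, provided the flag values are fetched remotely.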

Both methods require robust frameworks or platforms capable of handling user segmentation, experiment distribution, and data collection. Moreover, formulating a clear, testable hypothesis, defining the key performance indicators (KPIs), and ensuring the test's statistical significance are paramount for a successful A/B test.
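One common way to check statistical significance for a conversion KPI is a two-proportion z-test. The article does not prescribe a specific test, so treat the sketch below as one reasonable option under the usual large-sample assumptions; the function name and argument names are illustrative.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / conv_b: converted users in control / test
    n_a / n_b:       exposed users in control / test
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No variation at all (everyone or no one converted): no evidence.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

A result is usually called significant when the p-value falls below a threshold chosen before the test starts, such as 0.05.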

Conversion Rate Optimization: Refining User Experience

Conversion rate optimization for mobile applications involves many variables - from the simplicity of the onboarding process to the speed of in-app transactions. By leveraging data derived from A/B tests, businesses can iteratively improve user experiences, driving higher engagement and conversion rates.

Key techniques for mobile CRO include:

Enhancing the Mobile User Interface (UI)

The mobile UI is the visual medium through which users interact with an application. It's the gateway to the user experience and a crucial component of CRO. Enhancing the mobile UI focuses on creating an aesthetically pleasing, intuitive, and efficient design.

- Button Sizes and Spacing: The thumb is the primary tool for navigation on mobile, so button sizes and spacing should accommodate comfortable thumb movements. According to Apple's Human Interface Guidelines, the minimum target size should be 44 points by 44 points.

- Fonts: Fonts should be legible and simple. It's advisable to use a maximum of two to three different fonts in varying sizes to denote hierarchy and importance.

- Color Schemes: Colors can impact user behavior significantly. For instance, strong contrast between the text and background color can improve readability, while color psychology can provoke specific user emotions or actions.

- Icons and Images: These should be recognizable and consistent throughout the application. Custom iconography can reinforce brand identity but should still lean on established norms for user familiarity.
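The contrast point in the list above can be checked numerically. The article does not name a standard, so the sketch below assumes the WCAG 2.x contrast ratio formula, which is widely used for this purpose; the function names are illustrative.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel (0-255) to linear light."""
    c = channel / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb) -> float:
    """Relative luminance of an (R, G, B) color, from 0 (black) to 1 (white)."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background) -> float:
    """Contrast ratio between two colors, from 1:1 up to 21:1."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)
```

Under WCAG, a ratio of at least 4.5:1 is the AA threshold for normal-size text, which makes it a convenient guardrail when an A/B test varies text or button colors.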

Improving Mobile User Experience (UX)

UX is an umbrella term that encapsulates all aspects of a user's interaction with the app, including UI, but also broader elements like performance, utility, and ergonomics.

- Simplicity of Navigation: Users should be able to reach their desired screen in the fewest steps possible. A nested information architecture, a bottom navigation bar, or a hamburger menu can help facilitate this.

- Clarity of Instructions: Any instructions or prompts should be concise, clear, and written in plain language. Unclear instructions can lead to user frustration and app abandonment.

- Ease of Completing Desired Actions: Desired actions should be as effortless as possible. For example, forms should be auto-populated where possible, and checkout processes should be streamlined to reduce cart abandonment.

Optimizing for Various Mobile Platforms

Mobile platforms vary in terms of operating systems (iOS, Android), screen sizes, and device capabilities. Ensuring a seamless and consistent experience across these different platforms is critical.

- Responsive Design: The app should dynamically adjust to different screen sizes and orientations. This can be achieved through flexible layouts and density-independent units in the app's design.

- Platform-Specific Design: Different platforms have different design philosophies and guidelines (Material Design for Android and Human Interface Guidelines for iOS). Adhering to these ensures the app feels native and intuitive to users on each platform.

- Testing on Different Devices: From high-end devices to lower-end models, from small to large screens, the app should be thoroughly tested across a broad range of devices to ensure consistency and identify any device-specific bugs or issues.
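To show what density-independent units mean in practice, the sketch below converts Android dp and iOS points to physical pixels. The formulas (160 dpi as the Android baseline, and points multiplied by the screen scale factor on iOS) follow the platforms' documented conventions; the function names and sample sizes are illustrative.

```python
def dp_to_px(dp: float, dpi: float) -> int:
    """Android: px = dp * (dpi / 160), rounded to the nearest pixel."""
    return int(dp * dpi / 160 + 0.5)


def pt_to_px(points: float, scale: float) -> int:
    """iOS: px = points * scale factor (for example 2 or 3)."""
    return int(points * scale + 0.5)


# A 48 dp button keeps roughly the same physical size on every screen,
# while the number of pixels it covers grows with screen density.
for dpi in (160, 320, 480):
    print(f"48 dp at {dpi} dpi -> {dp_to_px(48, dpi)} px")
```

Specifying sizes in dp or points rather than raw pixels is what lets one layout definition hold up across low-density and high-density devices.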

Advanced Analytics: An Instrument for Deeper Insights

A deep dive into A/B testing and CRO would be incomplete without acknowledging the role of advanced analytics. Machine learning (ML) models, for example, can be used to analyze large datasets generated from these tests, uncovering hidden patterns and providing a deeper understanding of user behavior.

Predictive analytics is another powerful tool. By leveraging user data, businesses can predict future user behaviors and tailor their strategies accordingly. For instance, an algorithm could identify the likelihood of a user converting after taking certain actions within the app, enabling preemptive modifications to the app's features or workflows to boost conversions.
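As a very simple stand-in for the kind of model described above, the sketch below estimates the likelihood of conversion given that a user performed a certain action, using plain historical frequencies. It is not a trained ML model, and the action names and data layout are assumptions for illustration.

```python
from collections import Counter


def conversion_rate_by_action(sessions):
    """Estimate P(converted | user performed action) from past sessions.

    `sessions` is an iterable of (actions, converted) pairs, where
    `actions` is a collection of action names and `converted` is a bool.
    """
    seen = Counter()       # sessions in which each action occurred
    converted = Counter()  # of those, sessions that ended in a conversion
    for actions, did_convert in sessions:
        for action in set(actions):  # count each action once per session
            seen[action] += 1
            if did_convert:
                converted[action] += 1
    return {action: converted[action] / seen[action] for action in seen}
```

Actions with a high estimated rate are candidates for being surfaced earlier in the workflow, though a frequency like this shows correlation only; an A/B test is still needed to confirm that promoting the action actually raises conversions.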

Product Analytics Takeaways on Mobile A/B Testing and CRO

A/B testing and conversion rate optimization are key pillars of product analytics in the mobile landscape. By implementing these strategies and leveraging advanced analytics, businesses can create data-driven improvements that enhance the user experience and maximize conversions. It's a meticulous and iterative process requiring technical proficiency, a strong understanding of statistics, and a keen sense of user psychology. However, the rewards for businesses that successfully navigate this path can be game-changing.

In conclusion, A/B testing and conversion rate optimization are critical to elevating your mobile app's performance. With Countly's comprehensive analytics platform, these powerful techniques are at your fingertips.